The Problem: Running Multiple JAR Files in Parallel

In my project, we have a requirement to schedule and run around 50–100 jobs, where each job simply runs a .jar file.

You might think, “Wait, Databricks supports concurrent runs, right?”

Well, not exactly the way you might assume.

Misunderstood: max_concurrent_runs

You may have seen the max_concurrent_runs option when scheduling a job in Databricks. However, let me clarify:

That setting does not allow different jobs to run in parallel. It just controls how many instances of the same job can run at the same time.

For example:

If you set max_concurrent_runs = 2, and you trigger that job three times, the first two will run in parallel, and the third will be queued until one of the first two completes.

So, ii is not about running 10 different tasks at once — it’s just about parallel runs of the same job.

Goal: Run 10 Different Jobs at a Time

So, how do we run 10 different JAR files in parallel and efficiently utilize our cluster?

Here are two approaches:

✅ Approach 1: Chained Task Groups in a Single Job

You can create a single job with multiple tasks grouped into batches of 10:

First 10 tasks: run in parallel.

Next 10: each depends on one of the first 10.

Then the next 10: each depends on one of the second batch.

And so on…

Example:

Task 1 → 11 → 21 → 31

Task 2 → 12 → 22 → 32

…and so forth.

This way, you always keep only 10 tasks running at once.

It’s simple, and it works well!

⚠️ But there’s a problem…

Let’s say:

Task 1 and 2 take 20 minutes to complete.

Tasks 3 to 10 take just 5 minutes.

Now:

Tasks 11 and 12 can’t start until 1 and 2 are done.

Even though your cluster has free capacity (because tasks 3 to 10 are done), it sits idle.

So while this approach ensures parallelism, it underutilizes the cluster due to rigid task dependencies.

Lets think about another approach

The Better Solution (Approach 2): 100 Jobs + Python Parallelism 💡

Now here’s the better and more dynamic way we implemented.

We thought — okay, instead of creating one job with many tasks, why not:

✅ Create 100 different jobs (each running one .jar)

✅ And then use a Python script to launch them — 10 at a time

Sounds good? Wait, it gets cooler 😎

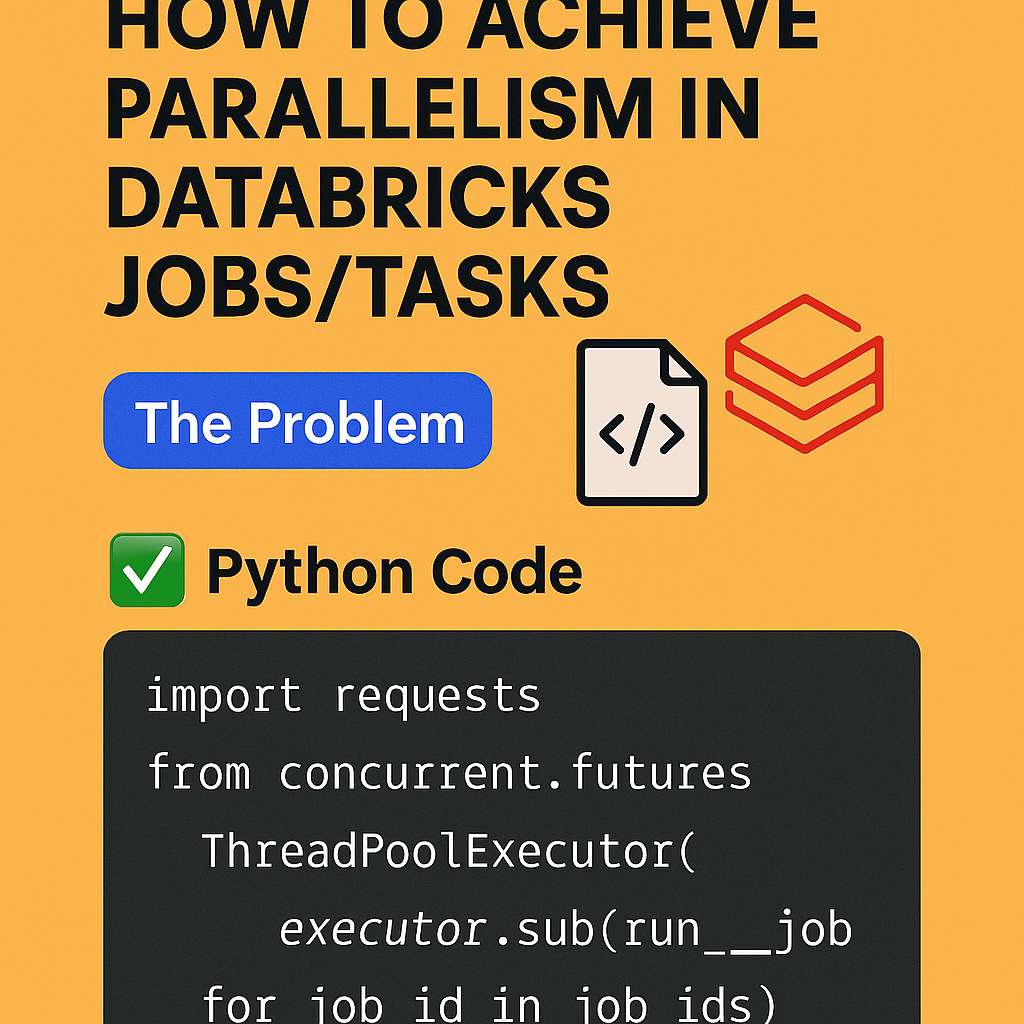

✅ Python Code to Run 100 Jobs in Parallel (10 at a Time)

Here’s the Python script using concurrent.futures:

import requests

from concurrent.futures import ThreadPoolExecutor, as_completed

# Replace these with your actual values

DATABRICKS_INSTANCE = "https://<your-workspace>.azuredatabricks.net"

DATABRICKS_TOKEN = "dapiXXXXXXXXXXXXXXXXXXXXXXX"

HEADERS = {"Authorization": f"Bearer {DATABRICKS_TOKEN}"}

# List of job IDs (already created in Databricks)

job_ids = [101, 102, 103, ..., 200] # total 100 jobs

def run_job(job_id):

url = f"{DATABRICKS_INSTANCE}/api/2.1/jobs/run-now"

payload = {"job_id": job_id}

try:

response = requests.post(url, headers=HEADERS, json=payload)

if response.status_code == 200:

run_id = response.json()["run_id"]

print(f"✅ Job {job_id} started with Run ID {run_id}")

else:

print(f"❌ Failed to start job {job_id}: {response.text}")

except Exception as e:

print(f"💥 Error for job {job_id}: {e}")

# Run jobs in batches of 10 using ThreadPoolExecutor

MAX_PARALLEL = 10

with ThreadPoolExecutor(max_workers=MAX_PARALLEL) as executor:

futures = [executor.submit(run_job, job_id) for job_id in job_ids]

for future in as_completed(futures):

pass # Just wait for all to finishNote this we are doing using data bricks token if you need to how to generate the token and how to run please let me know in comment i will write another blog for it.

Why This Rocks

✅ No need to play with max_concurrent_runs

✅ No complex task dependencies

✅ Works perfectly even if job durations are uneven

✅ Very easy to scale — just change the pool size!

Final Thoughts

So that’s how I learned to handle real parallelism in Databricks — not by using default settings or task dependencies, but by managing it smartly using Python.

Let me know if you want to see how to programmatically create 100 jobs using Python too! because using GUI for creating such no of job is not easy task.

I hope you like it and follow for data world.

Check out my SQL for data engineer series