Azure Databricks is an Apache Spark-based platform for big data analytics and machine learning, and Azure Data Lake Storage (ADLS) Gen2 provides scalable, secure storage for analytics workloads. In this blog post, we will connect Azure Databricks to an ADLS Gen2 container using a Shared Access Signature (SAS) token and then list the contents of that container, focusing on CSV files.

Prerequisites

- An active Azure subscription.

- An Azure Databricks workspace.

- An Azure Data Lake Storage Gen2 account with a container.

Step 1: Generate a SAS Token for Azure Data Lake

- Navigate to your Azure Data Lake Storage account in the Azure portal.

- Select the container you want to connect to.

- Open the three-dot (...) menu next to the container and select ‘Generate SAS’.

- Set the required permissions, expiry time, and other parameters.

- Click ‘Generate SAS token and URL’.

- Copy the SAS token (the part after the ‘?’ in the generated URL).
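If you want to double-check what you copied, the sketch below (plain Python, standard library only) splits the token out of a SAS URL and reads its `se` (signed expiry) field. The helper names are hypothetical, and the parsing assumes the expiry is an ISO 8601 UTC value, which is what the portal normally emits.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def sas_token_from_url(sas_url: str) -> str:
    """Return the SAS token, i.e. the query string after '?' in a SAS URL."""
    query = urlsplit(sas_url).query
    if not query:
        raise ValueError("URL has no query string; is this a SAS URL?")
    return query


def sas_expiry(sas_token: str) -> datetime:
    """Parse the signed-expiry ('se') field of a SAS token as an aware UTC datetime.

    Assumes an ISO 8601 value such as '2030-01-01T00:00:00Z'; parse_qs also
    decodes percent-encoded forms like '2030-01-01T00%3A00%3A00Z'.
    """
    se = parse_qs(sas_token)["se"][0]
    # fromisoformat() on older Pythons does not accept a trailing 'Z'
    expiry = datetime.fromisoformat(se.replace("Z", "+00:00"))
    if expiry.tzinfo is None:  # date-only values carry no offset
        expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry
```

Comparing `sas_expiry(token)` with `datetime.now(timezone.utc)` is a quick way to spot an expired token before debugging authentication errors in Databricks.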

Step 2: Configure Azure Databricks

- Launch your Azure Databricks workspace.

- Create a new cluster or use an existing one.

Step 3: Connect to Azure Data Lake Storage Using SAS Token

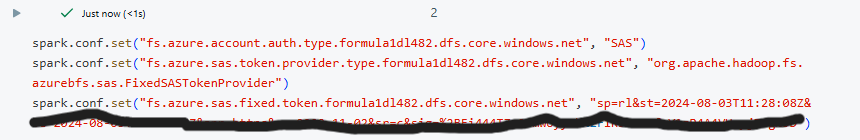

To connect Azure Databricks to your Azure Data Lake Storage account using a SAS token, you set three Spark configuration properties in your Databricks notebook that tell the ABFS driver to authenticate with a fixed SAS token.

- Open a new Databricks notebook.

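- Set the SAS token and the storage account details as follows:

```python
# Tell the ABFS driver to use SAS authentication for this storage account
spark.conf.set("fs.azure.account.auth.type.<storage-account>.dfs.core.windows.net", "SAS")
# Use a fixed (static) SAS token rather than a custom token provider
spark.conf.set("fs.azure.sas.token.provider.type.<storage-account>.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider")
# Read the SAS token from a Databricks secret scope instead of hard-coding it
spark.conf.set("fs.azure.sas.fixed.token.<storage-account>.dfs.core.windows.net", dbutils.secrets.get(scope="<scope>", key="<sas-token-key>"))
```

Replace the placeholders as follows:

- `<storage-account>` with the Azure Storage account name.

- `<scope>` with the Azure Databricks secret scope name.

- `<sas-token-key>` with the name of the key containing the Azure storage SAS token.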

Below is an example:
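As a sketch of the same three settings in reusable form, the hypothetical helper below builds the configuration keys for any storage account name and returns them as a dictionary. It also strips a leading ‘?’ in case the whole query string was copied; that is a defensive choice in this sketch, not a documented requirement. The commented lines show how it might be applied in a notebook, where `spark` and `dbutils` are predefined; "mystorageacct", "my-scope" and "sas-token" are made-up names.

```python
def sas_spark_conf(storage_account: str, sas_token: str) -> dict:
    """Build the ABFS settings for fixed-SAS-token auth on one storage account."""
    suffix = f"{storage_account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "SAS",
        f"fs.azure.sas.token.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider",
        # Defensive: drop a leading '?' if the full query string was pasted
        f"fs.azure.sas.fixed.token.{suffix}": sas_token.lstrip("?"),
    }

# In a Databricks notebook (hypothetical scope, key and account names):
#
# token = dbutils.secrets.get(scope="my-scope", key="sas-token")
# for key, value in sas_spark_conf("mystorageacct", token).items():
#     spark.conf.set(key, value)
```

Keeping the keys in one function avoids typos in the repeated `<storage-account>.dfs.core.windows.net` suffix when you work with several storage accounts.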

Step 4: List Contents of the Azure Data Lake Container
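Listing a container from Databricks goes through its ABFS URI, which has the form `abfss://<container>@<storage-account>.dfs.core.windows.net/<path>`. As a minimal sketch (hypothetical helper names, standard library only), the functions below build that URI and pick out CSV files from a list of file names. The commented lines show one way they might be combined with `dbutils.fs.ls` in a notebook, using made-up container and account names.

```python
def abfss_uri(container: str, storage_account: str, path: str = "") -> str:
    """Build an ABFS URI for a path inside an ADLS Gen2 container."""
    base = f"abfss://{container}@{storage_account}.dfs.core.windows.net/"
    return base + path.lstrip("/")


def csv_names(names) -> list:
    """Return the names ending in .csv (case-insensitive), sorted."""
    return sorted(n for n in names if n.lower().endswith(".csv"))

# In a Databricks notebook (hypothetical names):
#
# root = abfss_uri("mycontainer", "mystorageacct")
# entries = dbutils.fs.ls(root)          # list of FileInfo objects
# print(csv_names(e.name for e in entries))
```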